Semantic Mapping and Voice User Interface Based on ORB-SLAM and YOLO for Navigating Visually Impaired Person

Abstract

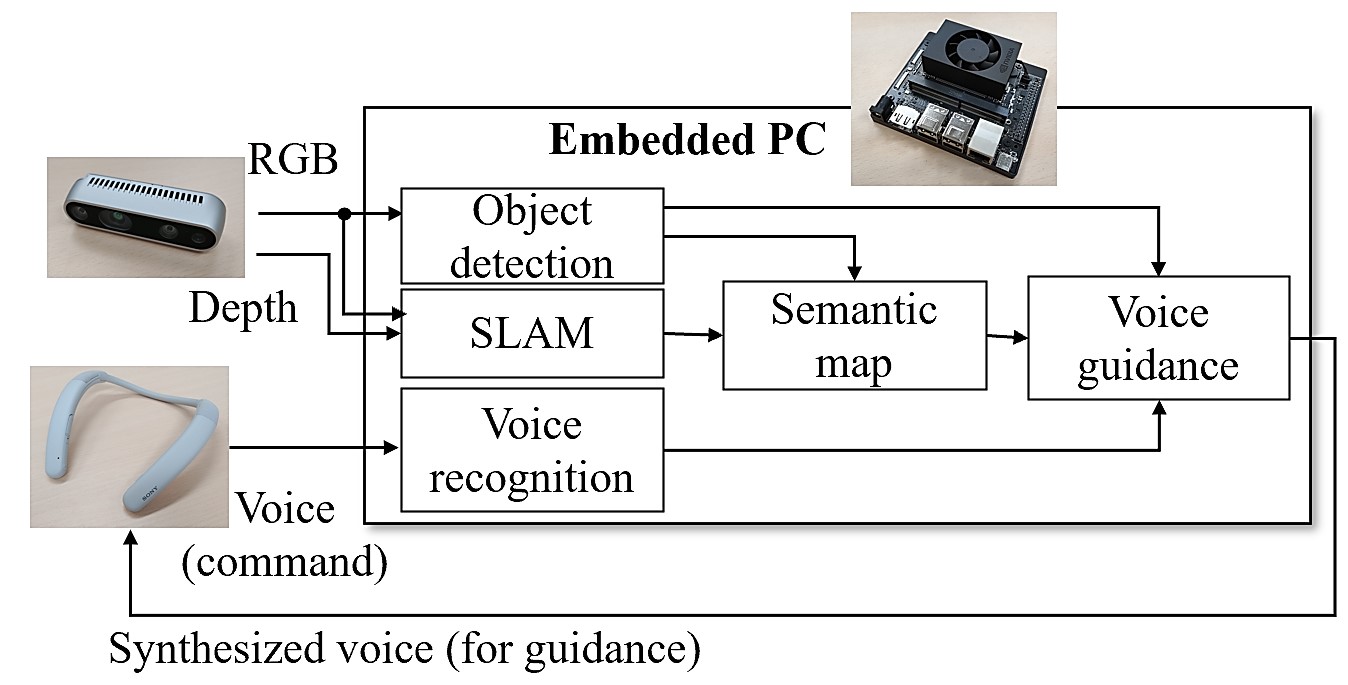

As the world's population grows and life expectancy increases, the number of visually impaired people is rising. To support them in everyday tasks, we developed a visual navigation map. The map combines the navigation map generated by visual SLAM with semantic information about landmarks detected by the YOLO object-detection algorithm, so that it can be used for voice navigation and other purposes. To help visually impaired people find the objects they are looking for in daily life, we also developed a voice user interface based on YOLO object detection. The interface is a relatively lightweight voice recognition system.
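The idea of attaching detector labels to map positions and querying them by voice can be illustrated with a minimal sketch. It is not the authors' implementation: the names `Landmark`, `SemanticMap`, and `answer_query`, the confidence threshold, and the keyword-matching step are all assumptions made for illustration. The sketch further assumes that a SLAM front end already supplies landmark positions in a common map frame and that a speech recognizer already supplies the query as text.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Landmark:
    """A detected object anchored in the map (hypothetical structure)."""
    label: str                             # class name reported by the detector
    position: tuple[float, float, float]   # assumed to be in the SLAM map frame
    confidence: float                      # detector confidence in [0, 1]


class SemanticMap:
    """Stores labeled landmarks; the threshold value is an assumption."""

    def __init__(self, min_confidence: float = 0.5):
        self.min_confidence = min_confidence
        self.landmarks: list[Landmark] = []

    def add_detection(self, label, position, confidence) -> bool:
        # Ignore weak detections so they do not pollute the map.
        if confidence < self.min_confidence:
            return False
        self.landmarks.append(Landmark(label.lower(), tuple(position), confidence))
        return True

    def nearest(self, label, user_position):
        # Return the closest landmark with the requested label, or None.
        candidates = [lm for lm in self.landmarks if lm.label == label.lower()]
        if not candidates:
            return None
        return min(candidates, key=lambda lm: dist(lm.position, user_position))


def answer_query(semantic_map, spoken_text, user_position) -> str:
    """Match words of an already-transcribed query against known labels."""
    known = {lm.label for lm in semantic_map.landmarks}
    for word in spoken_text.lower().split():
        word = word.strip(".,?!")
        if word in known:
            lm = semantic_map.nearest(word, user_position)
            meters = dist(lm.position, user_position)
            return f"The nearest {lm.label} is {meters:.1f} meters away."
    return "Sorry, I could not find that object on the map."
```

In this sketch a query such as "Where is the chair?" would be answered from the stored landmarks alone, without running the detector again. Real label sets with multi-word classes would need a more careful matching step than single-word lookup.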

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.
