Research on Communication Analysis between Vehicles and Pedestrians at Unsignalized Crosswalks Using Online Video

Abstract

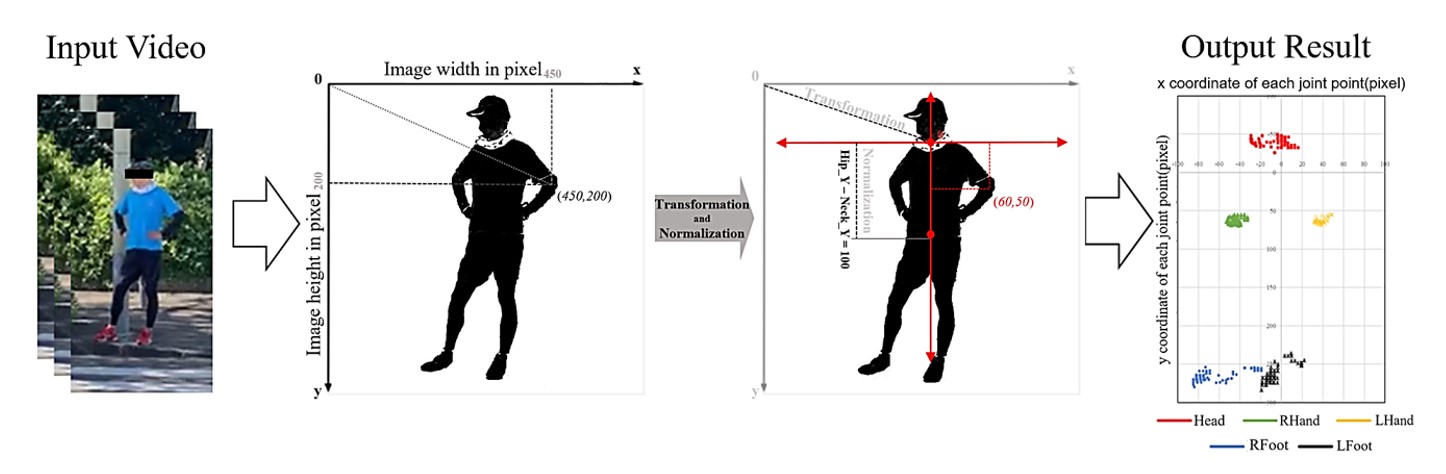

In recent years, considerable attention has been paid to research on communication involving self-driving vehicles. However, few studies have quantitatively evaluated and clarified the relationship between modern vehicles and pedestrians. Additionally, communication methods and gestures are perceived differently across countries and regions. Quantitative studies of such cross-country and cross-regional differences have traditionally required research teams to travel abroad, at considerable cost and effort. In this study, we propose a method for analyzing pedestrian behavior from online videos and use it, together with a questionnaire survey, to compare pedestrian communication in Japan and the U.S. Results obtained with the proposed algorithm reveal that Japanese pedestrians tend to use eye contact to communicate at unsignalized crosswalks, whereas American pedestrians tend to use hand-raising gestures. Moreover, in both countries, pedestrians' choice of gesture varies with the strength of their authority on the road.
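To make the hand-raising category more concrete, the following is a minimal sketch (not the authors' algorithm) of how a raised hand might be flagged from 2D body keypoints produced by a pose estimator such as OpenPose. The keypoint names (for example `right_wrist` and `right_shoulder`), the confidence threshold `min_conf`, and the consecutive-frame requirement `min_frames` are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Keypoint:
    x: float
    y: float
    confidence: float


def is_hand_raised(keypoints: Dict[str, Keypoint], min_conf: float = 0.3) -> bool:
    """Hypothetical heuristic: a hand counts as raised if either wrist is
    above the shoulder on the same side. Image y grows downward, so
    'above' means a smaller y value."""
    for side in ("left", "right"):
        wrist = keypoints.get(f"{side}_wrist")
        shoulder = keypoints.get(f"{side}_shoulder")
        if wrist is None or shoulder is None:
            continue  # keypoint missing for this side
        if wrist.confidence < min_conf or shoulder.confidence < min_conf:
            continue  # detection too uncertain to use
        if wrist.y < shoulder.y:
            return True
    return False


def contains_hand_raise(frames: Iterable[Dict[str, Keypoint]], min_frames: int = 5) -> bool:
    """Return True if a raised hand persists for at least `min_frames`
    consecutive frames of one pedestrian's keypoint track."""
    run = 0
    for keypoints in frames:
        run = run + 1 if is_hand_raised(keypoints) else 0
        if run >= min_frames:
            return True
    return False
```

Requiring several consecutive frames suppresses single-frame pose-estimation noise. A real analysis would also need to associate each detection with a specific pedestrian and crossing event, which this sketch does not attempt.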

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.
