Enhanced Running Spectrum Analysis for Robust Speech Recognition Under Adverse Conditions: A Case Study on Japanese Speech


Abstract

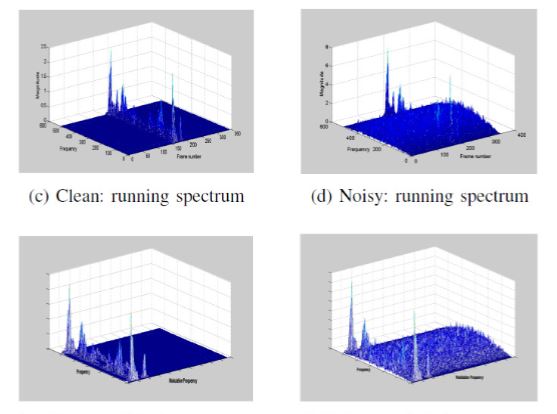

In real environments, noise degrades the performance of Automatic Speech Recognition (ASR) systems. Moreover, high recognition accuracy is difficult to achieve when phrases have similar pronunciations. To address these problems, this work proposes an enhanced algorithm that processes the speech modulation spectrum, based on Running Spectrum Analysis (RSA), and applies it to observed speech data. In the proposed method, modulation spectrum filtering (MSF) directly modifies the observed cepstral modulation spectrum, which is obtained by a Fourier transform of the time sequence of each cepstral coefficient. Experiments carried out for various passbands gave favorable results, showing an improvement of about 1 to 4% in recognition accuracy compared to conventional methods.
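The filtering step described above can be illustrated with a minimal sketch. It assumes the cepstra are stored as a list of frames, treats the time sequence of each cepstral dimension as a signal sampled at the frame rate, and applies an ideal (brick-wall) band-pass filter in the modulation domain using a plain DFT. The function names, the ideal filter shape, and any passband values are illustrative assumptions, not the authors' RSA/MSF implementation.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform (an FFT would be used in practice)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse DFT, including the 1/n normalization."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def modulation_bandpass(trajectory, frame_rate, f_low, f_high):
    """Hypothetical helper: band-pass one cepstral trajectory in the modulation domain.

    trajectory: values of one cepstral coefficient over time (one per frame).
    frame_rate: frames per second, i.e. the sampling rate of the trajectory.
    f_low, f_high: passband edges in Hz (assumed values, chosen by the caller).
    """
    n = len(trajectory)
    spectrum = dft(trajectory)
    for k in range(n):
        # Bins k and n - k are conjugate pairs with the same modulation frequency,
        # so both are kept or both are zeroed, which keeps the output real.
        freq = min(k, n - k) * frame_rate / n
        if not (f_low <= freq <= f_high):
            spectrum[k] = 0
    # Discard the negligible imaginary residue left by floating-point error.
    return [value.real for value in idft(spectrum)]


def msf(cepstra, frame_rate, f_low, f_high):
    """Apply the band-pass filter to every cepstral dimension independently.

    cepstra: list of frames, each frame a list of cepstral coefficients.
    Returns filtered cepstra in the same frames-by-coefficients layout.
    """
    n_frames = len(cepstra)
    n_dims = len(cepstra[0])
    columns = [[frame[d] for frame in cepstra] for d in range(n_dims)]
    filtered = [modulation_bandpass(col, frame_rate, f_low, f_high) for col in columns]
    return [[filtered[d][t] for d in range(n_dims)] for t in range(n_frames)]
```

For example, with a 10 ms frame shift (100 frames per second), `msf(cepstra, 100.0, 1.0, 10.0)` would keep only modulation components between 1 and 10 Hz; the passbands actually evaluated in the paper are not stated in this abstract. Because any `f_low` above 0 Hz removes the DC modulation component, this kind of filter also has an effect similar to cepstral mean normalization.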
