Generative Adversarial Network for DOTA2 Character Generation

Keywords:

Generative adversarial networks, deep learning, DOTA2, StyleGAN, few-shot image generation

Abstract

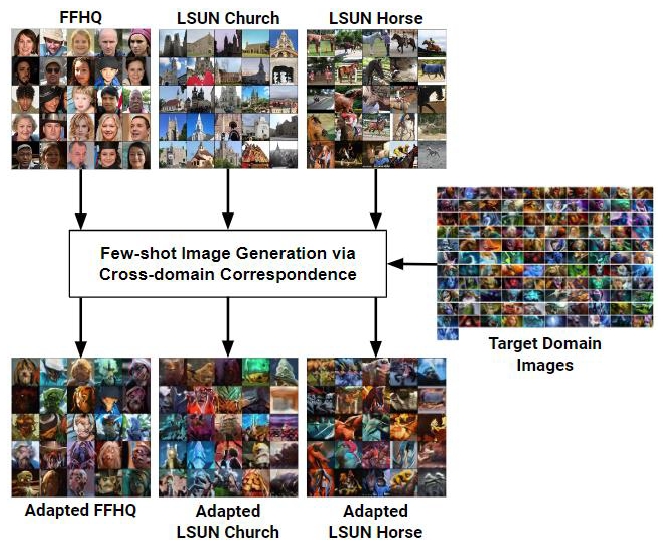

DOTA2 is a popular game in the e-sports industry with a high market value. Developing a wider variety of in-game character designs can increase both the company's revenue and player satisfaction. Many studies have demonstrated the effectiveness of Generative Adversarial Networks (GANs) for creating cartoon images or recreating portraits. This research therefore studies the effectiveness of StyleGAN for recreating and redesigning DOTA2 characters. Images were generated with Few-shot Image Generation via Cross-domain Correspondence, a method that does not require the large number of training images a regular GAN needs and that avoids overfitting to the few training images. The training dataset consisted of 111 thumbnails of DOTA2 hero characters, each 256x144 pixels. Three pretrained source models were adapted: 1) the Source_ffhq model (a StyleGAN2 model that learned to create human faces), 2) the Church model (a StyleGAN2 model that learned to create church images), and 3) the Horse model (a StyleGAN2 model that learned to create horse images). Quantitative comparisons showed that the model adapted from FFHQ could correctly map a person's eyes and nose to the character's eyes and nose, although the mouth was erased; the other models could create only half of the image, which still corresponded to the character traits. The images created by the three models were quite dark and fresh, resembling images of DOTA2 heroes. On the Fréchet Inception Distance (FID) metric, generated images with a low FID score were close to the original DOTA2-style images. In the qualitative evaluation, the sampled players preferred the Source_ffhq model the most, followed by the Church and Horse models, respectively. Their preferences were driven mainly by familiarity with DOTA2.
Therefore, this research can guide the creation of new character images from the original DOTA2 hero character series, offering opportunities to increase the game developer's revenue and player satisfaction and to advance knowledge of game development using GAN techniques.
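
For readers unfamiliar with the FID metric mentioned above: as defined by Heusel et al. (2017), FID fits a Gaussian to the Inception features of the real images and another to those of the generated images, then computes ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2)), so a lower score means the two feature distributions are closer. The sketch below is only an illustration of that formula, not the evaluation code used in this study. It assumes diagonal covariances (a simplification under which the trace term reduces to a sum of squared differences of standard deviations) and uses hypothetical random vectors in place of Inception features; the names `frechet_distance_diag` and `fake_features` are made up for the example.

```python
import random
import statistics


def frechet_distance_diag(feats_a, feats_b):
    """Frechet distance between two Gaussians with diagonal covariance.

    feats_a, feats_b: lists of equal-length feature vectors (lists of floats).
    Assumption: feature dimensions are treated as independent, so the trace
    term of the full formula reduces to sum((sigma_a - sigma_b) ** 2).
    """
    total = 0.0
    # zip(*feats) iterates over feature dimensions (columns).
    for col_a, col_b in zip(zip(*feats_a), zip(*feats_b)):
        mu_a, mu_b = statistics.fmean(col_a), statistics.fmean(col_b)
        sd_a, sd_b = statistics.pstdev(col_a), statistics.pstdev(col_b)
        total += (mu_a - mu_b) ** 2 + (sd_a - sd_b) ** 2
    return total


def fake_features(rng, n, dim, mean, std):
    """Hypothetical stand-in for Inception features: n Gaussian vectors."""
    return [[rng.gauss(mean, std) for _ in range(dim)] for _ in range(n)]


rng = random.Random(0)
reference = fake_features(rng, 200, 4, 0.0, 1.0)  # plays the role of real images
near = fake_features(rng, 200, 4, 0.1, 1.0)       # similar distribution
far = fake_features(rng, 200, 4, 2.0, 1.5)        # clearly different distribution

d_near = frechet_distance_diag(reference, near)
d_far = frechet_distance_diag(reference, far)
print(d_near < d_far)
```

The set drawn from a distribution similar to the reference gets the smaller distance, which mirrors how a low FID is read in the abstract. Full FID implementations use the complete covariance matrices of Inception-v3 features rather than this diagonal shortcut.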

References

Alghifari, I., & Halim, R. (2020). Factors that influence expectancy for character growth in online games and their influence on online gamer loyalty. Journal of Economics, Business, & Accountancy Ventura, 22. https://doi.org/10.14414/jebav.v22i3.1873

Auc, T. (2013). DOTA2 Hero [Photograph]. Retrieved from https://auct.eu/dota2-hero-images

Back, J. (2021). Fine-Tuning StyleGAN2 For Cartoon Face Generation. https://arxiv.org/abs/2106.12445

Clement, J. (2021). Number of monthly active users (MAU) of DOTA 2 worldwide as of February 2021. https://www.statista.com/statistics/607472/DOTA2-users-number

Creswell, J. W., & Creswell, J. D. (2018). Research design: qualitative, quantitative, and mixed methods approaches (5th ed.). Los Angeles: SAGE.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative Adversarial Nets. Proceedings of the International Conference on Neural Information Processing Systems (NIPS 2014), 2672–2680.

Grand View Research. (2020). Esports Market Size, Share & Trends Analysis Report By Revenue Source (Sponsorship, Advertising, Merchandise & Tickets, Media Rights), By Region, And Segment Forecasts, 2020 - 2027. https://www.grandviewresearch.com/industry-analysis/esports-market#

Guruprasad, G., Gakhar, G., & Vanusha, D. (2020). Cartoon character generation using generative Adversarial Network. International Journal of Recent Technology and Engineering, 9(1), 1–4. https://doi.org/10.35940/ijrte.f7639.059120

Hagiwara, Y., & Tanaka, T. (2020). YuruGAN: Yuru-Chara Mascot Generator Using Generative Adversarial Networks With Clustering Small Dataset. https://arxiv.org/abs/2004.08066

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B., & Hochreiter, S. (2017). GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. Proceedings of the International Conference on Neural Information Processing Systems (NIPS 2017), 6629–6640.

Jin, Y., Zhang, J., Li, M., Tian, Y., Zhu, H., & Fang, Z. (2017). Towards the Automatic Anime Characters Creation with Generative Adversarial Networks. NIPS 2017 Workshop on Machine Learning for Creativity and Design. https://nips2017creativity.github.io/doc/High_Quality_Anime.pdf

Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J., & Aila, T. (2020). Analyzing and Improving the Image Quality of StyleGAN. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020). https://doi.org/10.1109/CVPR42600.2020.00813

Karras, T., Laine, S., & Aila, T. (2021). A style-based generator architecture for Generative Adversarial Networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(12), 4217–4228. https://doi.org/10.1109/tpami.2020.2970919

Krohn, J., Beyleveld, G., & Bassens, A. (2020). Deep Learning Illustrated: A Visual, Interactive Guide to Artificial Intelligence. Boston: Addison-Wesley.

Kynkäänniemi, T., Karras, T., Aittala, M., Aila, T., & Lehtinen, J. (2022). The Role of ImageNet Classes in Fréchet Inception Distance. arXiv preprint arXiv:2203.06026

Li, B., Zhu, Y., Wang, Y., Lin, C. W., Ghanem, B., & Shen, L. (2021). AniGAN: Style-guided generative adversarial networks for unsupervised anime face generation. IEEE Transactions on Multimedia, 24, 4077-4091. https://doi.org/10.1109/tmm.2021.3113786

Ojha, U., Li, Y., Lu, J., Efros, A. A., Lee, Y. J., Shechtman, E., & Zhang, R. (2021). Few-shot image generation via cross-domain correspondence. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 10743-10752).

Park, B.-W., & Lee, K. C. (2011). Exploring the value of purchasing online game items. Computers in Human Behavior, 27(6). https://doi.org/10.1016/j.chb.2011.06.013

Tseng, H. Y., Jiang, L., Liu, C., Yang, M. H., & Yang, W. (2021). Regularizing generative adversarial networks under limited data. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 7921-7931). https://doi.org/10.1109/CVPR46437.2021.00783

Vavilala, V., & Forsyth, D. (2022). Controlled GAN-Based Creature Synthesis via a Challenging Game Art Dataset-Addressing the Noise-Latent Trade-Off. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (pp. 3892-3901). https://doi.org/10.1109/wacv51458.2022.00019

License

Copyright (c) 2023 Journal of Applied Science and Emerging Technology

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.