Leveraging Multi-Round Learning and Noisy Labeled Images from Online Sources for Durian Leaf Disease and Pest Classification

Abstract

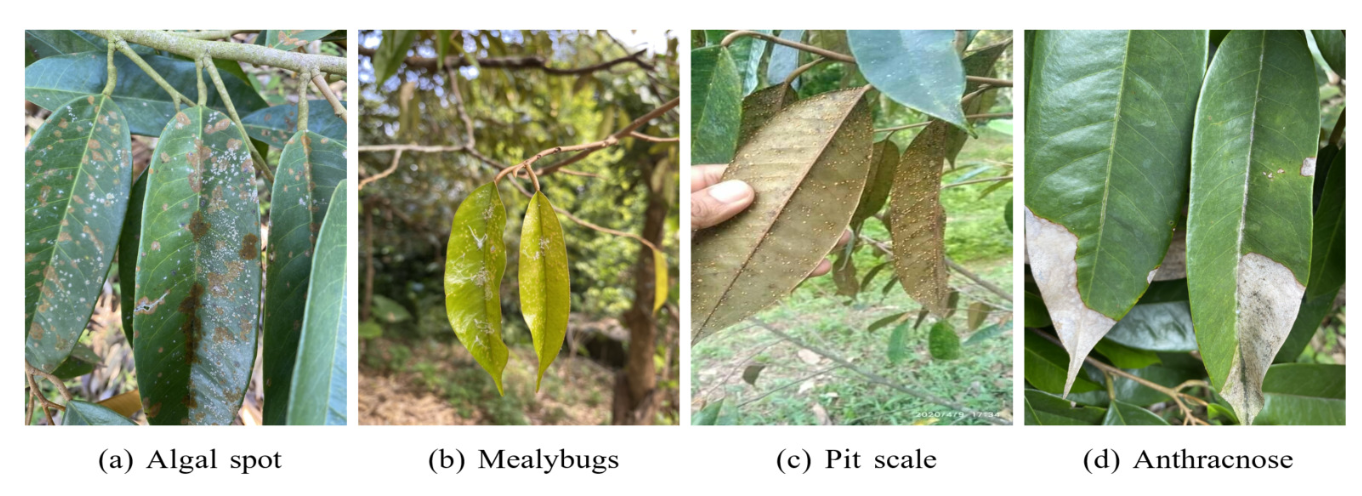

Durian has recently become a major agricultural export commodity for Southeast Asian countries. However, the plant is vulnerable to various diseases and pests, which are usually considered the main cause of poor yields and low-quality crops, leading to substantial economic losses. This work focuses on leaf diseases and pests: their symptoms are easy to see, yet diagnosing the underlying problem correctly remains challenging. To address this difficulty, this work uses deep learning techniques to classify a given photo into the corresponding disease or pest class. However, building a high-performance deep neural network model requires a substantial number of ground-truth photos of diseases and pests on durian leaves, which are difficult and expensive to acquire. To overcome this challenge, we propose enriching the limited number of expert-labeled images with abundantly available, noisily labeled images collected from the Internet. A sample selection framework is introduced to choose noisy images for augmenting the current training set, which is then used to build a new classifier in the next learning round. We found that such a multi-round learning scheme, in which noisy photos are intuitively selected, provides complementary information to the limited ground truth, thereby enhancing the prediction accuracy on unseen examples of the classifier being built by 20% at a particular learning round.
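The multi-round scheme described in the abstract can be illustrated with a minimal sketch. The abstract does not state the selection criterion or the network architecture, so everything below is an assumption made for illustration: a nearest-centroid classifier on toy feature vectors stands in for the deep network, a noisy image is selected only when the current classifier agrees with its web label with confidence above a hypothetical `threshold`, and the class names and function names are invented for the example.

```python
import math
from collections import defaultdict


def fit_centroids(samples):
    """Fit a nearest-centroid classifier (stand-in for the deep network).

    samples: list of (feature_vector, label) pairs.
    Returns a dict mapping each label to its mean feature vector.
    """
    sums, counts = {}, defaultdict(int)
    for x, y in samples:
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_proba(centroids, x):
    """Softmax over negative Euclidean distances to each class centroid."""
    scores = {y: -math.dist(x, c) for y, c in centroids.items()}
    top = max(scores.values())
    exps = {y: math.exp(s - top) for y, s in scores.items()}
    total = sum(exps.values())
    return {y: e / total for y, e in exps.items()}


def multi_round_learning(clean, noisy, rounds=3, threshold=0.7):
    """Grow the training set from a noisy pool over several rounds.

    Assumed selection rule (not taken from the paper): keep a noisy sample
    when the current model predicts its web label with probability of at
    least `threshold`.
    """
    train = list(clean)   # expert-labeled images
    pool = list(noisy)    # web images with possibly wrong labels
    model = fit_centroids(train)
    for _ in range(rounds):
        selected, remaining = [], []
        for x, y in pool:
            probs = predict_proba(model, x)
            pred = max(probs, key=probs.get)
            if pred == y and probs[pred] >= threshold:
                selected.append((x, y))
            else:
                remaining.append((x, y))
        if not selected:
            break  # nothing new to add, so further rounds change nothing
        train.extend(selected)
        pool = remaining
        model = fit_centroids(train)  # new classifier for the next round
    return model, train, pool


# Toy data: 2-D features with hypothetical class names.
clean = [((0.0, 0.0), "healthy"), ((0.2, 0.1), "healthy"),
         ((5.0, 5.0), "blight"), ((5.1, 4.8), "blight")]
noisy = [((0.1, 0.2), "healthy"), ((4.9, 5.2), "blight"),
         ((0.0, 0.1), "blight")]  # the last web label is deliberately wrong

model, train, pool = multi_round_learning(clean, noisy)
```

In this toy run the two consistently labeled web samples join the training set, while the mislabeled one stays in the pool. A real pipeline would replace the centroid model with a trained image classifier and would need a held-out, expert-labeled set to measure whether each round actually helps.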

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
