Multi-granularity Self-attention Mechanisms for Few-shot Learning

Abstract

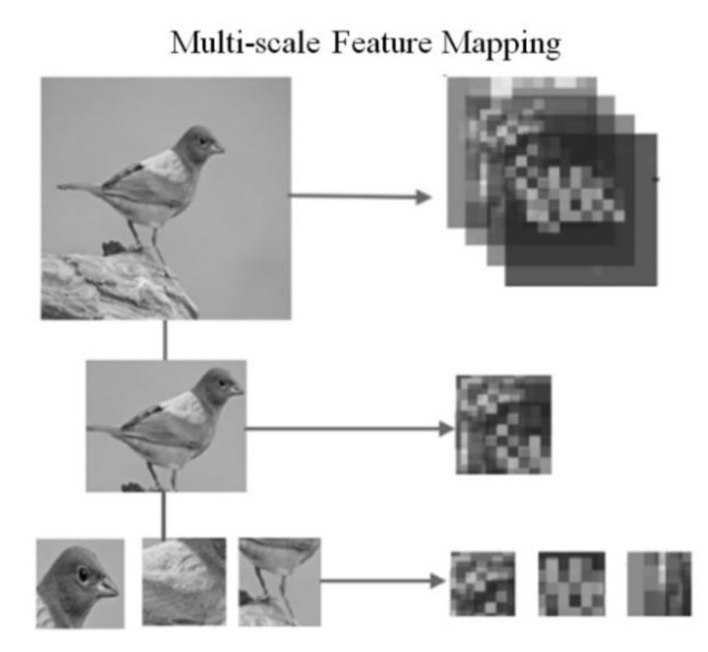

Few-shot learning aims to classify novel data categories from a limited number of labeled samples. Although metric-based meta-learning has shown good generalization ability as a few-shot classification method, it still struggles with data noise and with keeping inter-sample distances stable. To address these issues, our study proposes a few-shot learning approach that enhances the global and local semantic representation of image features. First, the method employs a multiscale residual module to extract multi-granularity features from images. Next, it fuses local and global features using the self-attention mechanism of the Transformer module. Finally, a weighted metric module is integrated to improve the model's robustness to noise. Empirical evaluations on the CIFAR-FS and MiniImageNet few-shot datasets in 5-way 1-shot and 5-way 5-shot settings demonstrate the effectiveness of our approach in capturing multi-level and multi-granularity image representations. Compared with other methods, our method improves accuracy in the 5-shot setting by 2.63% and 1.27% on CIFAR-FS and MiniImageNet, respectively. The experimental results validate the efficacy of our model in significantly enhancing few-shot image classification performance.
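The abstract does not say how the weighted metric module computes its weights, so the following is only a minimal sketch of one plausible reading, not the authors' implementation. It assumes that each class prototype is a weighted mean of the support embeddings, with weights given by a softmax over negative squared distances to the plain class mean, so that a noisy support sample contributes less. The function names, the `temperature` parameter, and the toy 2-D embeddings are all hypothetical.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def weighted_prototype(support, temperature=1.0):
    """Weighted mean of support embeddings for one class.

    Assumption (not from the paper): weights are a softmax over the
    negative squared distance of each sample to the plain class mean,
    so outlying (possibly noisy) samples receive small weights.
    """
    dim = len(support[0])
    mean = [sum(v[d] for v in support) / len(support) for d in range(dim)]
    scores = [-squared_distance(v, mean) / temperature for v in support]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * v[d] for w, v in zip(weights, support)) for d in range(dim)]


def classify(query, support_by_class, temperature=1.0):
    """Assign the query to the class with the nearest weighted prototype."""
    prototypes = {
        label: weighted_prototype(vectors, temperature)
        for label, vectors in support_by_class.items()
    }
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


# Toy 2-way 3-shot episode; the third "cat" sample plays the role of noise.
episode = {
    "cat": [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]],
    "dog": [[4.0, 4.0], [4.2, 4.0], [4.0, 4.1]],
}
cat_prototype = weighted_prototype(episode["cat"])
prediction = classify([0.5, 0.5], episode)
```

In this toy episode the outlying "cat" sample receives a weight close to zero, so the prototype stays near the two clean samples instead of being pulled toward the outlier as a plain mean would be. In a full pipeline the embeddings would come from the feature extractor rather than being written by hand.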

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
