Wiener Filter with Convolutional Neural Network for Noise Removal in API-Based AI Models

Abstract

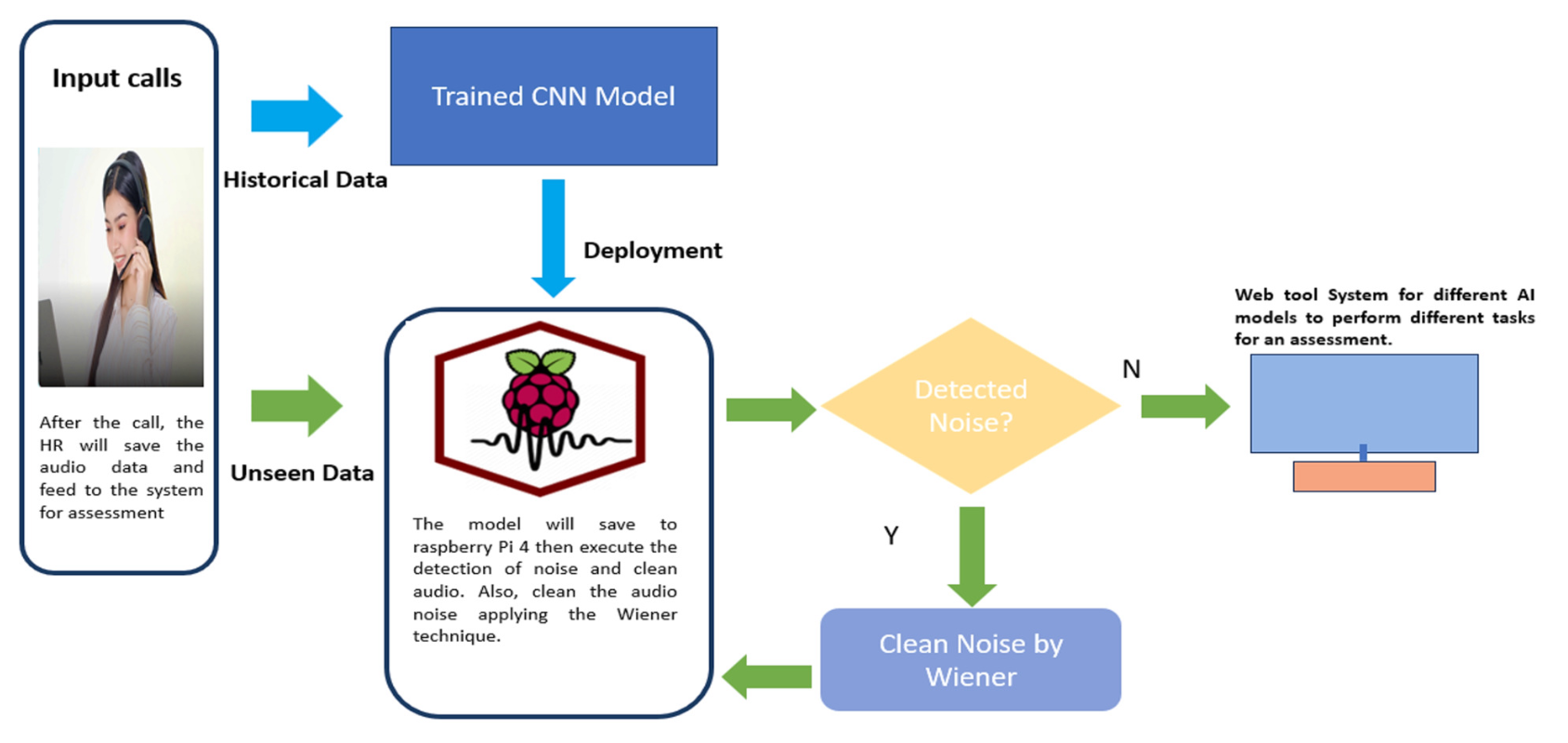

This research aims to develop a robust Application Programming Interface (API)-based Artificial Intelligence (AI) system that removes noise from audio signals, improving speech quality and intelligibility in noisy environments before the audio is fed into other AI models that assess applicant interviews. The proposed methodology combines signal processing techniques and noise reduction algorithms with AI models trained on clean voice data and noise patterns. It relies on two key components: the Wiener filter and a Convolutional Neural Network (CNN). The Wiener filter serves as the foundational noise reduction technique, exploiting the statistical properties of the signal and the noise to suppress unwanted noise components. The CNN is integrated to classify audio as clean or noisy. Five optimizers (Adam, SGD, RMSprop, Adagrad, and Adadelta) were evaluated to identify the most suitable one for this classification task, using both cross-validation and hold-out validation with the same batch size (25) and number of epochs (25). The Adam optimizer yielded the best results. The number of epochs was then tuned over 35, 75, 105, and 125, and 105 epochs was selected, giving an accuracy of 99.52%, recall of 100%, F1-score of 99.50%, and ROC AUC of 99.99% for cross-validation, and an accuracy of 98.79%, recall of 99.21%, F1-score of 98.81%, and ROC AUC of 99.54% for hold-out validation, significantly improving AI model performance. Lastly, the batch size was tuned over 25, 50, 75, and 125 using the selected optimizer and number of epochs; a batch size of 25 yielded the best accuracy. The CNN also included L2 kernel regularization to avoid overfitting.
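The idea behind the Wiener filter stage can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes white noise of known variance and a synthetic tone standing in for speech, and it processes the whole signal in a single FFT rather than frame by frame. The function name `wiener_denoise` and all parameter values are hypothetical.

```python
import numpy as np

def wiener_denoise(noisy, noise_psd, eps=1e-12):
    """Apply a frequency-domain Wiener gain to a 1-D signal.

    noise_psd: estimated noise power per rFFT bin (assumed known here;
    in practice it would be estimated, e.g. from noise-only segments).
    """
    spectrum = np.fft.rfft(noisy)
    noisy_psd = np.abs(spectrum) ** 2
    # Estimate clean-signal power by subtracting the noise power, floored at 0.
    signal_psd = np.maximum(noisy_psd - noise_psd, 0.0)
    # Wiener gain H = S / (S + N), which lies in [0, 1] for every bin.
    gain = signal_psd / (signal_psd + noise_psd + eps)
    return np.fft.irfft(gain * spectrum, n=len(noisy))

# Synthetic example: a 440 Hz tone plus white Gaussian noise (assumed setup).
rng = np.random.default_rng(0)
fs = 8000                      # samples per second, one second of audio
sigma = 0.5                    # noise standard deviation
t = np.arange(fs) / fs
clean = np.sin(2 * np.pi * 440 * t)
noisy = clean + sigma * rng.standard_normal(fs)

# For white noise, the expected power in each FFT bin is n * sigma^2.
noise_psd = np.full(fs // 2 + 1, fs * sigma ** 2)
denoised = wiener_denoise(noisy, noise_psd)

mse_before = np.mean((noisy - clean) ** 2)
mse_after = np.mean((denoised - clean) ** 2)
print(f"MSE before: {mse_before:.4f}, after: {mse_after:.4f}")
```

The gain stays close to 1 in bins dominated by the signal and falls toward 0 in bins dominated by noise, which is how the filter uses the statistical properties of both. Real speech is non-stationary, so a practical system would typically apply such a gain per short-time frame.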

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
