SETA- Extractive to Abstractive Summarization with a Similarity-Based Attentional Encoder-Decoder Model

Abstract

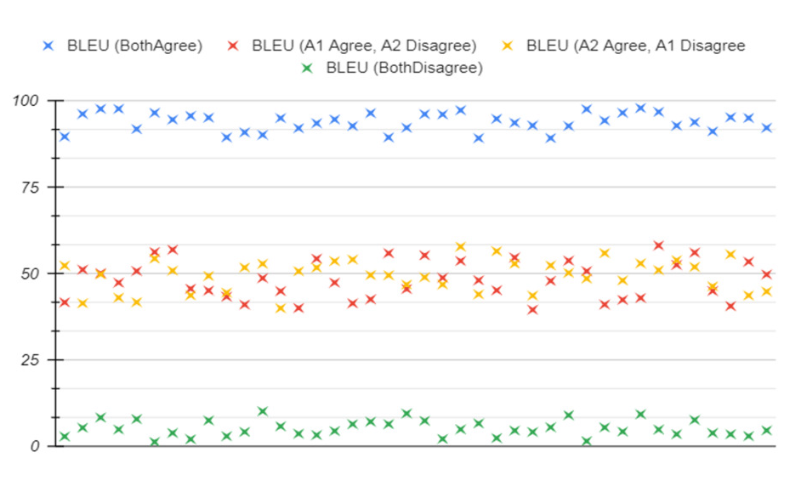

Summarizing the information presented in tables of scientific documents is a long-standing problem. A system that can summarize the vital information a table encapsulates gives readers a quick and straightforward way to comprehend the contents of the document. Training such systems requires data, and high-quality datasets are hard to find. To mitigate this challenge, we developed a high-quality corpus that contains both extractive and abstractive summaries derived from tables using a rule-based approach. This dataset was validated using a combination of automated and manual metrics. Subsequently, we developed a novel attention-based Encoder-Decoder framework to generate abstractive summaries from extractive ones. The model takes a mix of extractive summaries and inter-sentential similarity embeddings as input and learns to map them to the corresponding abstractive summaries. Our experiments show that the model addresses the saliency factor of summarization, an aspect overlooked by previous works. Further experiments show that it generates coherent abstractive summaries, as validated by high BLEU and ROUGE scores.

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
