Enhancing Text Summarization using Hybrid LSTM-GRU with Lingual Significance Relation-based Attention Mechanism Model

Abstract

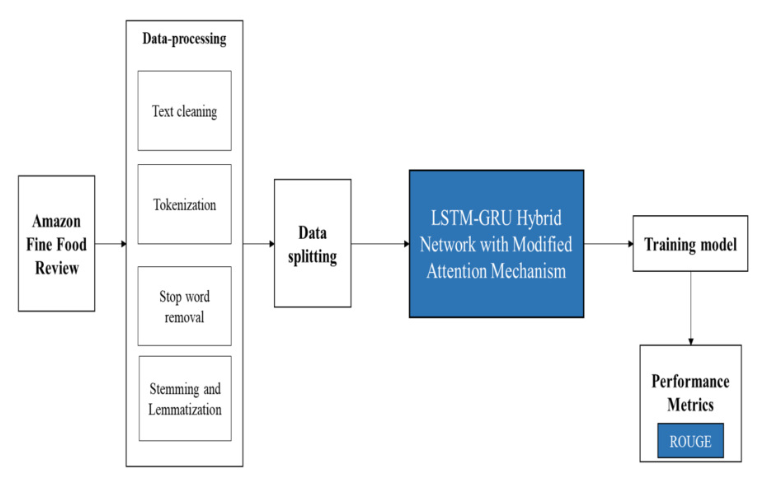

Text summarization condenses a large text into a short, concise version that retains its most important information. Various AI-based techniques have been applied to this task in existing work, but the prevailing methods fall short in producing accurate summaries. The proposed work therefore employs a hybrid LSTM-GRU (Long Short-Term Memory and Gated Recurrent Unit) model with a Modified Attention Mechanism (M-AM). The dataset used is Amazon Fine Food Reviews. Pre-processing steps, namely tokenization, text cleaning, stop-word removal, stemming, and lemmatization, remove unwanted and irrelevant text. The proposed model uses the hybrid LSTM-GRU with M-AM because it is faster and consumes less memory, while still being able to capture long-term dependencies. Further, M-AM incorporates a lexical sequence measure and a sentence context weight to make summarization more effective. The major contribution of the proposed work is thus to summarize text into a crisp and brief format for easy understanding. Finally, the performance of the proposed model is evaluated using ROUGE, accuracy, and loss; the proposed model obtains a ROUGE score of 55.5.
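To make the pre-processing and evaluation steps named in the abstract more concrete, the following is a minimal Python sketch, not the authors' implementation. It shows text cleaning, tokenization, and stop-word removal, followed by a ROUGE-N computation based on n-gram overlap. The function names, the tiny stop-word list, and the choice of ROUGE-1 are assumptions made for illustration; the abstract does not state which ROUGE variant or tooling was used, and stemming and lemmatization are omitted here for brevity.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real pipelines use a fuller list).
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "and", "or",
              "of", "to", "in", "it", "this", "i"}


def preprocess(text):
    """Lowercase, strip HTML tags and punctuation, tokenize, drop stop words."""
    text = re.sub(r"<[^>]+>", " ", text.lower())  # text cleaning: remove markup
    tokens = re.findall(r"[a-z0-9']+", text)      # simple regex tokenization
    return [t for t in tokens if t not in STOP_WORDS]


def ngrams(tokens, n):
    """Count the n-grams (as tuples) that occur in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """Return ROUGE-N precision, recall, and F1 for two token lists."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    # Clipped overlap: each n-gram counts at most as often as it appears in both.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical review summary pair, invented for this example.
    reference = preprocess("Great tasting coffee")
    candidate = preprocess("The coffee is great!")
    print(rouge_n(candidate, reference, n=1))
```

Scores from this sketch lie between 0 and 1. A reported value such as 55.5 would correspond to the same quantity on a 0 to 100 scale, assuming the paper follows that common convention.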

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
