Visual SLAM Framework with Culling Strategy Enhancement for Dynamic Object Detection

Abstract

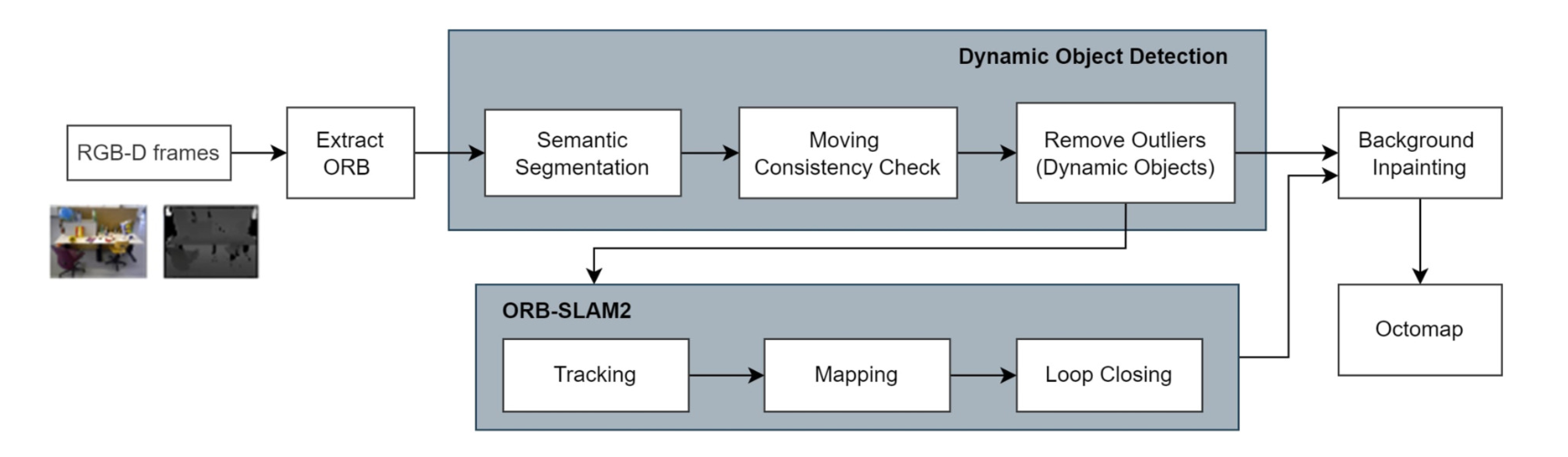

Visual Simultaneous Localization and Mapping (visual SLAM or vSLAM) enables robots to navigate and perform complex tasks in unfamiliar environments. Most visual SLAM techniques operate effectively in static environments, where objects are stationary. In practical applications, however, the environment is often dynamic and contains moving objects, and visual SLAM methods designed for static environments perform poorly there. In this paper, we propose an additional processing stage that improves the performance of visual SLAM in dynamic environments. Our system, based on ORB-SLAM2, combines dynamic object detection with background inpainting to reduce the effect of dynamic objects. It detects moving objects using both semantic segmentation and Lucas-Kanade (LK) optical flow with an epipolar constraint, which improves localization accuracy in dynamic scenarios. Because the system maintains a map of the scene, the background occluded by dynamic objects can be inpainted using static information observed in previous views. Finally, a semantic OctoMap is built, which can be used for navigation and other high-level tasks. Experiments were carried out on the TUM RGB-D dataset and in a real-world environment, with the system implemented on the Robot Operating System (ROS). The results show that the Absolute Trajectory Error (ATE) is reduced by up to 98.03% compared with standard visual SLAM baselines, demonstrating that the proposed detection process identifies movable objects and reduces their impact on visual SLAM.
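
The epipolar-constraint test mentioned in the abstract can be illustrated as follows. For a static point tracked by LK optical flow from the previous frame (x1) to the current frame (x2), the product x2^T F x1 should be close to zero, so the distance from x2 to the epipolar line F x1 measures how inconsistent the point is with camera motion alone. This is a minimal NumPy sketch, not the authors' implementation: it assumes the fundamental matrix F has already been estimated (for example with RANSAC over the tracked points), and the function names `epipolar_distances` and `flag_dynamic` and the 1-pixel threshold are hypothetical. Fusing the result with the semantic segmentation mask is not shown.

```python
import numpy as np


def epipolar_distances(F, pts_prev, pts_curr):
    """Distance (in pixels) from each current point to its epipolar line.

    F: (3, 3) fundamental matrix with x_curr^T F x_prev = 0 for static points
       (assumed to be estimated beforehand, e.g. with RANSAC).
    pts_prev, pts_curr: (N, 2) pixel coordinates of LK-tracked matches.
    """
    ones = np.ones((pts_prev.shape[0], 1))
    x_prev = np.hstack([pts_prev, ones])  # homogeneous coordinates, (N, 3)
    x_curr = np.hstack([pts_curr, ones])
    lines = x_prev @ F.T  # row i is the epipolar line F @ x_prev[i] = (a, b, c)
    numer = np.abs(np.sum(lines * x_curr, axis=1))  # |x_curr^T F x_prev|
    denom = np.sqrt(lines[:, 0] ** 2 + lines[:, 1] ** 2)  # normalise by sqrt(a^2 + b^2)
    return numer / denom


def flag_dynamic(F, pts_prev, pts_curr, threshold=1.0):
    """Mark matches farther than `threshold` px (hypothetical value) from their epipolar line."""
    return epipolar_distances(F, pts_prev, pts_curr) > threshold


if __name__ == "__main__":
    # Pure sideways camera translation with identity intrinsics: F = [t]_x, t = (1, 0, 0),
    # so every epipolar line is horizontal (y = const).
    F = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]])
    prev = np.array([[10.0, 20.0], [30.0, 40.0]])
    curr = np.array([[15.0, 20.0], [30.0, 45.0]])  # second point drifts 5 px vertically
    print(epipolar_distances(F, prev, curr))
    print(flag_dynamic(F, prev, curr))
```

In this toy setup the first point slides along its own row and stays consistent with the camera motion, while the second leaves its epipolar line by 5 px and is flagged as dynamic. A real pipeline would work with calibrated intrinsics and a tuned threshold.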

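To make the reported metric concrete: the TUM RGB-D benchmark defines ATE as the root-mean-square error of the translational differences between ground-truth and estimated camera positions after the estimate has been rigidly aligned to the ground truth. The sketch below assumes both trajectories are already time-associated N x 3 position arrays and uses an SVD-based (Kabsch) closed-form rigid alignment without scale. The helper names are hypothetical, and reading "reduced by up to 98.03%" as the relative reduction (baseline - ours) / baseline is an assumption, not a formula stated in the abstract.

```python
import numpy as np


def align_rigid(est, gt):
    """Rotation R and translation t minimising sum ||gt_i - (R @ est_i + t)||^2 (no scale)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)  # 3x3 cross-covariance of centred positions
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(3)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    D[2, 2] = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ D @ U.T
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse(est, gt):
    """ATE as RMSE of position errors after rigid alignment (positions assumed associated)."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    errors = np.linalg.norm(aligned - gt, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))


def relative_reduction(baseline_ate, new_ate):
    """Percentage reduction of ATE relative to a baseline (assumed interpretation)."""
    return 100.0 * (baseline_ate - new_ate) / baseline_ate


if __name__ == "__main__":
    gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0],
                   [0.0, 0.0, 3.0], [1.0, 1.0, 1.0]])
    Rz = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])  # 90 deg about z
    est = gt @ Rz.T + np.array([5.0, -2.0, 1.0])  # same shape, different world frame
    print(ate_rmse(est, gt))  # close to zero: the offset is removed by alignment
    print(relative_reduction(2.0, 0.5))
```

Aligning before measuring matters because a SLAM system's map frame is arbitrary: a trajectory that is correct up to a global rotation and translation should score an ATE near zero.
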
This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
