A Multi-Grained Attention Residual Network for Image Classification

Abstract

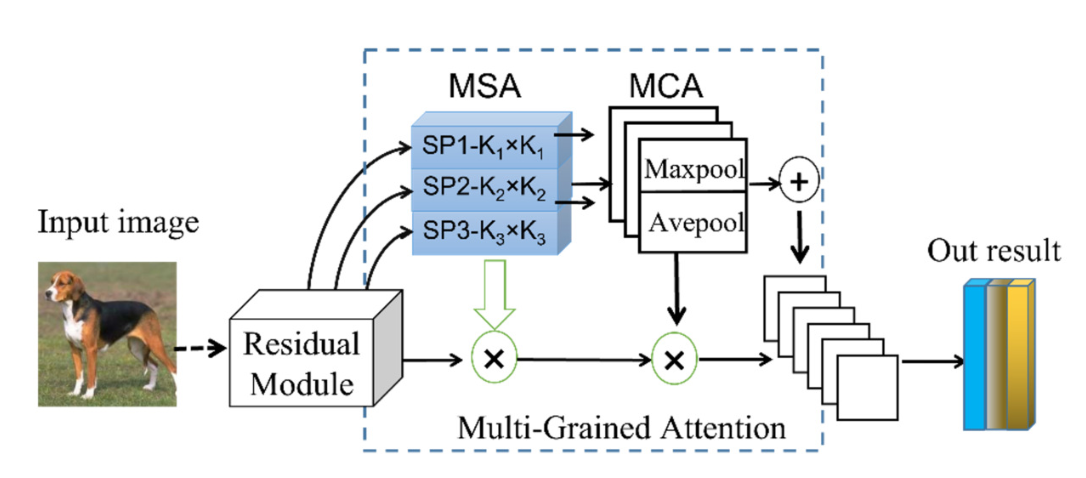

Attention mechanisms in deep learning let a network focus on the features that matter for the target task while ignoring irrelevant details. This paper proposes a new multi-grained attention model (MGAN) for extracting salient parts of images. The model combines a multi-grain spatial attention (MSA) mechanism with a multi-grain channel attention (MCA) mechanism. We use different convolutional branches and pooling layers to focus on the crucial information in the sample feature space and to extract richer multi-grain features from the image. MGAN is built on ResNet and Res2Net backbone networks for the image classification task. Experiments on the CIFAR-10/100 and Mini-ImageNet datasets show that MGAN focuses better on the critical information in the sample feature space, extracts richer multi-grain features from the images, and significantly improves the classification accuracy of the backbone networks.
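The abstract does not specify the internals of MSA and MCA, so the following is only a hypothetical NumPy sketch of how "multi-grain" channel and spatial attention could be wired into a residual unit, in the style of common attention modules such as SE and CBAM. Every concrete choice here is an assumption, not the authors' method: the grain sizes (1, 2, 4), the "max over a grid of local averages" channel descriptor, the shared two-layer MLP, the 3/5/7 spatial kernel branches, the sigmoid gating, and the residual form y = x + MSA(MCA(x)). All function names are illustrative, and the backbone convolutions are omitted.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def grid_avg_pool(x, g):
    """Average-pool a (C, H, W) map onto a g x g grid; assumes H and W are divisible by g."""
    c, h, w = x.shape
    return x.reshape(c, g, h // g, g, w // g).mean(axis=(2, 4))


def multi_grain_channel_attention(x, w1, w2, grains=(1, 2, 4)):
    """Hypothetical MCA: for each grain g, take the max over a g x g grid of local averages
    (g=1 is plain global average pooling), feed it to a shared two-layer MLP, sum the
    logits over grains, and squash with a sigmoid to get one weight per channel."""
    logits = np.zeros(x.shape[0])
    for g in grains:
        desc = grid_avg_pool(x, g).max(axis=(1, 2))  # (C,) descriptor at this grain
        hidden = np.maximum(w1 @ desc, 0.0)          # shared MLP: C -> C/r, ReLU
        logits += w2 @ hidden                        # C/r -> C
    weights = sigmoid(logits)                        # (C,) values in (0, 1)
    return x * weights[:, None, None], weights


def conv2d_same(x, kernel):
    """Zero-padded 'same' cross-correlation of a (Cin, H, W) map with a (Cin, k, k) kernel, k odd."""
    _, h, w = x.shape
    k = kernel.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            # Weighted sum over input channels of the shifted window.
            out += np.tensordot(kernel[:, i, j], xp[:, i:i + h, j:j + w], axes=(0, 0))
    return out


def multi_grain_spatial_attention(x, kernels):
    """Hypothetical MSA: pool across channels (mean and max), run parallel conv branches
    with different kernel sizes, sum them, and squash with a sigmoid to get one weight per pixel."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    logits = sum(conv2d_same(pooled, kern) for kern in kernels)
    weights = sigmoid(logits)                           # (H, W) values in (0, 1)
    return x * weights[None, :, :], weights


def attention_residual_unit(x, params):
    """Hypothetical residual wrapper: y = x + MSA(MCA(x)); backbone convolutions omitted."""
    y, _ = multi_grain_channel_attention(x, params["w1"], params["w2"])
    y, _ = multi_grain_spatial_attention(y, params["kernels"])
    return x + y


rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4  # channels, height, width, MLP reduction ratio (all illustrative)
x = rng.standard_normal((C, H, W))
params = {
    "w1": rng.standard_normal((C // r, C)) * 0.1,
    "w2": rng.standard_normal((C, C // r)) * 0.1,
    "kernels": [rng.standard_normal((2, k, k)) * 0.1 for k in (3, 5, 7)],
}
y = attention_residual_unit(x, params)
print(y.shape)  # (16, 8, 8)
```

Under these assumptions, the grain parameter interpolates between global average pooling (g = 1) and global max pooling (g = H), which is one simple way to give channel attention several granularities; the kernel-size branches play the same role for spatial attention. The residual addition keeps the unit's output shape equal to its input, so it can be dropped between backbone stages without changing the surrounding layers.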

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.