Machine Reading Comprehension Using Multi-Passage BERT with Dice Loss on Thai Corpus

Abstract

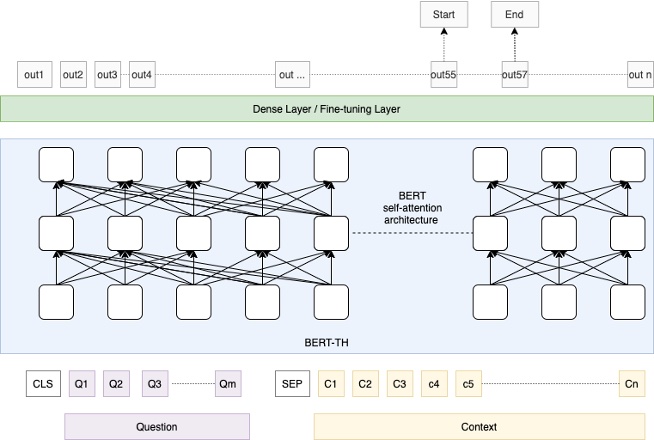

Machine reading comprehension (MRC) has advanced considerably with the introduction of large-scale pre-trained language models such as BERT. However, performance remains limited when the context is long and contains many passages. BERT can embed only as much of a passage as fits within its input size, so a sliding window must be used, which fragments information across windows when the passage is long. In this paper, we propose a BERT-based MRC framework tailored to long-passage contexts in a Thai corpus. Our framework combines multi-passage BERT with self-adjusting dice loss, which helps the model focus on the answer region of the context passage. We also show that adding an auxiliary task improves performance. Experiments were conducted on the Thai Question Answering (QA) dataset used in the Thailand National Software Competition. The results show that our method improves the model's performance over a traditional BERT framework.
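To make the two mechanisms named in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It shows (a) how a long token sequence might be cut into overlapping windows that each fit a fixed input size, and (b) the form usually given for self-adjusting dice loss in the NLP literature. The function names, `max_len`, `stride`, `alpha`, and `gamma` are illustrative assumptions; the abstract does not state the exact variant or hyperparameters used.

```python
from typing import List, Sequence, Tuple


def sliding_windows(
    tokens: Sequence[str], max_len: int, stride: int
) -> List[Tuple[int, List[str]]]:
    """Split a token sequence into overlapping windows of at most max_len.

    Returns (start_offset, window_tokens) pairs. max_len and stride are
    hypothetical parameters chosen for illustration only.
    """
    if max_len <= 0 or stride <= 0 or stride > max_len:
        raise ValueError("require 0 < stride <= max_len")
    windows = []
    start = 0
    while True:
        windows.append((start, list(tokens[start:start + max_len])))
        if start + max_len >= len(tokens):
            break  # the last window already reaches the end of the passage
        start += stride
    return windows


def self_adjusting_dice_loss(
    probs: Sequence[float],
    labels: Sequence[int],
    alpha: float = 1.0,
    gamma: float = 1.0,
) -> float:
    """Mean self-adjusting dice loss over token positions.

    probs[i] is the predicted probability that position i is positive
    (for example, an answer boundary) and labels[i] is 0 or 1.
    The (1 - p)^alpha factor down-weights positions the model already
    predicts confidently; gamma is a smoothing constant.
    Assumed form: 1 - (2 w y + gamma) / (w + y + gamma), w = (1 - p)^alpha p.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        weighted = ((1.0 - p) ** alpha) * p
        dsc = (2.0 * weighted * y + gamma) / (weighted + y + gamma)
        total += 1.0 - dsc
    return total / len(probs)


if __name__ == "__main__":
    passage = list("abcdefghij")  # stand-in for a tokenized Thai passage
    for offset, window in sliding_windows(passage, max_len=4, stride=2):
        print(offset, window)
    print(self_adjusting_dice_loss([0.9, 0.1, 0.2], [1, 0, 0]))
```

An answer span that straddles a window boundary is only partly visible in any single window unless the stride leaves enough overlap, which is the fragmentation the abstract refers to. Because answer positions are a small fraction of all tokens in a long passage, a dice-style objective is one way to keep the many easy negative positions from dominating training.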

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
