Interpretation of Spatial Relationships by Objects Tracking in a Complex Streaming Video


Abstract

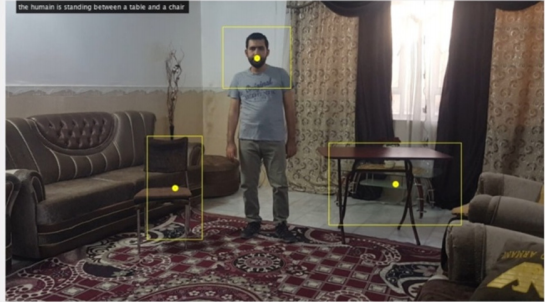

Interpreting spatial relations among objects benefits many applications, such as video surveillance, robotics, and scene understanding systems. Most existing models of spatial relationships are defined for still images. However, advances in technology have made three-dimensional scenes available. To our knowledge, most of the spatial relations interpreted so far were defined between static objects in images. This paper presents a technique for determining the dynamic spatial relation between a moving object and a static one in a time-varying scene. The spatial relationships are determined using motion-based object tracking together with a hypergraph object-oriented model. The relationship between a single static object and a moving human body is determined in two steps: each object is first enclosed in a bounding box, and the locations of these boxes are then compared using a set of conditional rules. This study identifies several spatial relationships in three dimensions across streaming frames using the proposed algorithm, which is designed to be highly accurate and efficient. The following relations were studied: “directly in front of”, “in front of on the Right/Left”, “directly behind”, “behind on the Right/Left”, “to the Right”, “to the Left”, “On”, “Under”, “Beside”, and “Beside on the Right/Left”. Experimental results obtained on real indoor streaming frames show that our system is effective and performs reliably.
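The two-step comparison of bounding boxes by conditional rules can be illustrated with a short sketch. It is a minimal example under stated assumptions, not the authors' algorithm. The `Box3D` class, the `spatial_relation` function, the axis convention (x grows to the viewer's right, y grows upward, z grows away from the camera), the reading of "in front of" as "closer to the camera", and the `contact_tol` and `beside_gap` thresholds are all hypothetical. The sketch also assumes the tracker already provides an axis-aligned 3D box for the moving person and for the static object. It only approximates the listed relations. For instance, "beside" is produced only with a left/right qualifier, and overlapping boxes are reported as "intersecting".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box3D:
    """Axis-aligned 3D bounding box (hypothetical representation).

    Assumed axes: x grows to the viewer's right, y grows upward,
    z grows away from the camera (depth).
    """
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float


def _order(a_min: float, a_max: float, b_min: float, b_max: float) -> int:
    """Compare 1D intervals: -1 if a lies entirely before b, +1 if entirely after, 0 if they overlap."""
    if a_max < b_min:
        return -1
    if a_min > b_max:
        return 1
    return 0


def spatial_relation(moving: Box3D, ref: Box3D,
                     contact_tol: float = 0.05, beside_gap: float = 0.3) -> str:
    """Label where `moving` lies relative to the static `ref` box.

    The rules and default thresholds are illustrative assumptions.
    """
    lateral = _order(moving.x_min, moving.x_max, ref.x_min, ref.x_max)
    depth = _order(moving.z_min, moving.z_max, ref.z_min, ref.z_max)
    side = {-1: "left", 1: "right"}

    # Footprints overlap in the x-z plane: only vertical relations remain.
    if lateral == 0 and depth == 0:
        if moving.y_min >= ref.y_max - contact_tol:
            return "on"
        if moving.y_max <= ref.y_min + contact_tol:
            return "under"
        return "intersecting"  # boxes overlap in 3D; not one of the listed relations

    # Smaller z means closer to the camera, i.e. "in front of" the reference.
    if depth == -1:
        return "directly in front of" if lateral == 0 else f"in front of, on the {side[lateral]}"
    if depth == 1:
        return "directly behind" if lateral == 0 else f"behind, on the {side[lateral]}"

    # Same depth band and disjoint in x: a purely lateral relation.
    gap = ref.x_min - moving.x_max if lateral == -1 else moving.x_min - ref.x_max
    if gap <= beside_gap:
        return f"beside, on the {side[lateral]}"
    return f"to the {side[lateral]}"


if __name__ == "__main__":
    table = Box3D(0.0, 1.0, 0.0, 0.8, 2.0, 3.0)
    person = Box3D(0.2, 0.7, 0.0, 1.8, 1.0, 1.5)
    print(spatial_relation(person, table))  # directly in front of
    cup = Box3D(0.4, 0.5, 0.8, 0.95, 2.4, 2.5)
    print(spatial_relation(cup, table))  # on
```

The sketch tests the vertical relations first, so "On" and "Under" are returned only when the two footprints overlap. Each rule reduces to interval comparisons along a single axis, which keeps the per-frame cost constant for each object pair.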


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
