A Comparison of vision-based and CNN-based detector for fish monitoring in complex environment

Abstract

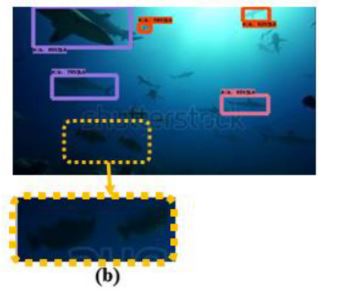

Aquaculture farming can help soften the environmental impact of overfishing by meeting seafood demand with farmed fish. However, maintaining large-scale farms can be challenging, even with underwater cameras affixed to farm cages, because the cameras produce hours' worth of footage to sift through, which is a laborious task if performed manually. A vision-based system could therefore be deployed to filter useful information from video footage automatically. This work proposes to address these problems by deploying two fish detection methods: 1) the Extended UTAR Aquaculture Farm Fish Monitoring System Framework (UFFMS), a handcrafted method, and 2) the Faster Region-based Convolutional Neural Network (Faster RCNN), a CNN-based method. Both methods can extract information about fish from video footage. Experimental results show that Faster RCNN performs better than the extended UFFMS on well-lit footage. However, the accuracy of Faster RCNN drops drastically on poorly lit footage (an average of 28.57%), even though its precision scores remain perfect.
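The last point, that accuracy can collapse while precision stays perfect, follows from how the two metrics count errors. The abstract does not define accuracy, so the minimal sketch below assumes a common detection-style definition, TP / (TP + FP + FN); the function names and the counts are hypothetical illustrations, not the paper's implementation or data. Precision only penalizes false positives (boxes drawn on something that is not a fish), so a detector that draws few but correct boxes keeps a precision of 1.0, while every missed fish (a false negative) pulls accuracy down.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of reported detections that are real fish: TP / (TP + FP).

    Undefined when nothing is detected; this sketch returns 0.0 by convention.
    """
    return tp / (tp + fp) if tp + fp else 0.0


def detection_accuracy(tp: int, fp: int, fn: int) -> float:
    """ASSUMED accuracy definition (not taken from the paper): TP / (TP + FP + FN)."""
    total = tp + fp + fn
    return tp / total if total else 0.0


# Hypothetical counts for a poorly lit clip: every box drawn is a real fish
# (no false positives), but most fish in the frame are missed (many false negatives).
tp, fp, fn = 3, 0, 7
print(f"precision = {precision(tp, fp):.2f}")               # precision = 1.00
print(f"accuracy  = {detection_accuracy(tp, fp, fn):.2f}")  # accuracy  = 0.30
```

With no false positives, this assumed accuracy reduces to recall, TP / (TP + FN), which is why missed detections in dark footage show up in accuracy but not in precision.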

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
