Bimodal Emotion Recognition Using Deep Belief Network

Abstract

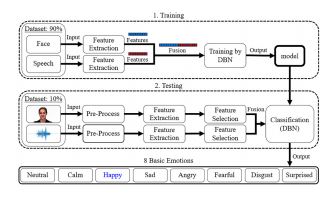

Emotions play an important role in daily human life. Enabling machines to recognize a person's emotional state and respond intelligently to that person's needs is very important in human-computer interaction. Most existing work concentrates on classifying only the six basic emotions. This work proposes a multimodal emotion recognition system that integrates information from both facial expressions and speech. The database covers eight emotions (neutral, calm, happy, sad, angry, fearful, disgust, and surprised). Emotions are classified using a deep belief network. Experimental results show that the bimodal emotion recognition system improves recognition performance, reaching an overall accuracy of 97.92%.
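The abstract names the ingredients (facial and speech features, a deep belief network, eight emotion classes) but not how they are combined. What follows is a minimal NumPy sketch under stated assumptions, not the authors' implementation. It assumes feature-level fusion, meaning per-sample facial and speech vectors are concatenated and min-max scaled to [0, 1]. It builds the deep belief network greedily from Bernoulli restricted Boltzmann machines trained with one-step contrastive divergence (CD-1), following the standard layer-wise scheme for deep belief nets, and puts a softmax layer on top while omitting supervised fine-tuning. The names `fuse_features`, `RBM`, and `DBNClassifier`, as well as the layer sizes, learning rates, and epoch counts, are all hypothetical. The demo uses random placeholder features rather than real face or speech data.

```python
import numpy as np

# The eight emotion classes listed in the abstract; list order defines label indices.
EMOTIONS = ["neutral", "calm", "happy", "sad", "angry", "fearful", "disgust", "surprised"]


def fuse_features(face, speech):
    """Hypothetical feature-level fusion: concatenate per-sample facial and speech
    vectors, then min-max scale each column to [0, 1] for Bernoulli visible units.
    Statistics come from the batch passed in; real use would reuse training-set ones."""
    x = np.hstack([face, speech]).astype(float)
    lo, hi = x.min(axis=0), x.max(axis=0)
    return (x - lo) / np.where(hi > lo, hi - lo, 1.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class RBM:
    """Bernoulli-Bernoulli restricted Boltzmann machine trained with CD-1."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b = np.zeros(n_visible)  # visible biases
        self.c = np.zeros(n_hidden)   # hidden biases
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.c)

    def fit(self, v0, epochs=50, lr=0.1):
        n = v0.shape[0]
        for _ in range(epochs):
            h0 = self.hidden_probs(v0)
            h0_sample = (self.rng.random(h0.shape) < h0).astype(float)  # sample hidden states
            v1 = sigmoid(h0_sample @ self.W.T + self.b)  # reconstruct visible units
            h1 = self.hidden_probs(v1)
            # CD-1 update: data-driven statistics minus reconstruction statistics.
            self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
            self.b += lr * (v0 - v1).mean(axis=0)
            self.c += lr * (h0 - h1).mean(axis=0)
        return self


class DBNClassifier:
    """Greedy layer-wise stack of RBMs topped by a softmax layer.
    Supervised fine-tuning of the RBM weights is omitted in this sketch."""

    def __init__(self, layer_sizes=(32, 16), seed=0):
        self.layer_sizes = layer_sizes
        self.rng = np.random.default_rng(seed)

    def _transform(self, x):
        for rbm in self.rbms:
            x = rbm.hidden_probs(x)
        return x

    def fit(self, x, y, n_classes=len(EMOTIONS), epochs=300, lr=0.5):
        self.rbms, inp = [], x
        for size in self.layer_sizes:
            rbm = RBM(inp.shape[1], size, self.rng).fit(inp)  # train this layer alone
            self.rbms.append(rbm)
            inp = rbm.hidden_probs(inp)  # its hidden activations feed the next layer
        # Softmax regression on the top-layer features, fitted by gradient descent.
        self.V = np.zeros((inp.shape[1], n_classes))
        self.d = np.zeros(n_classes)
        onehot = np.eye(n_classes)[y]
        for _ in range(epochs):
            grad = self.predict_proba_features(inp) - onehot
            self.V -= lr * inp.T @ grad / len(y)
            self.d -= lr * grad.mean(axis=0)
        return self

    def predict_proba_features(self, f):
        z = f @ self.V + self.d
        z -= z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
        e = np.exp(z)
        return e / e.sum(axis=1, keepdims=True)

    def predict(self, x):
        return self.predict_proba_features(self._transform(x)).argmax(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    y = np.repeat(np.arange(len(EMOTIONS)), 10)         # 10 synthetic samples per class
    face = rng.normal(size=(len(y), 12)) + y[:, None]   # placeholder facial features
    speech = rng.normal(size=(len(y), 6)) - y[:, None]  # placeholder speech features
    model = DBNClassifier().fit(fuse_features(face, speech), y)
    pred = model.predict(fuse_features(face, speech))
    print("training accuracy:", (pred == y).mean())
    print("first predictions:", [EMOTIONS[i] for i in pred[:3]])
```

Feature-level fusion is only one option. Decision-level fusion, where separate facial and speech classifiers are trained and their class probabilities are combined afterwards, would fit the abstract's description equally well.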

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
