Segmenting Snow Scene from CCTV using Deep Learning Approach

Abstract

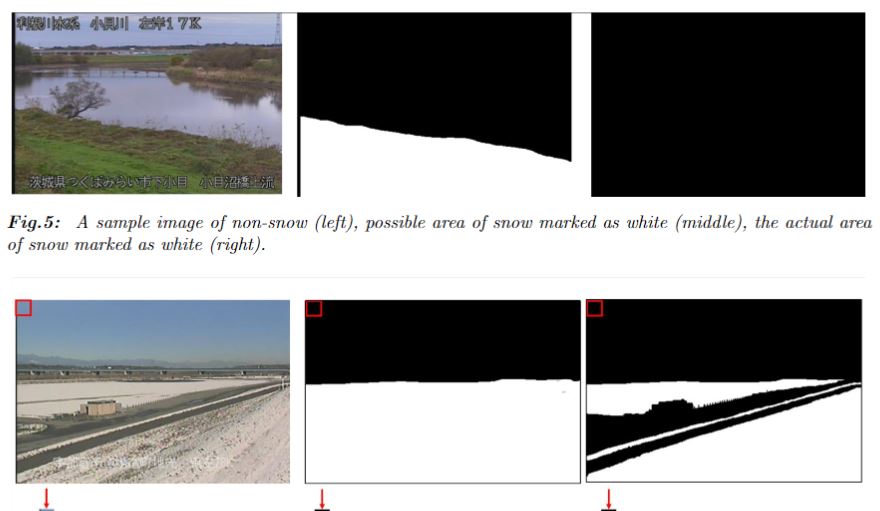

Recently, data from many sensors, such as river water levels, rainfall levels, and snowfall levels, has been used in disaster monitoring. Numeric data of these types can be used directly in further analysis. In contrast, data from CCTV cameras (i.e. images and/or videos) cannot easily be interpreted automatically. Traditionally, it is provided to users only for visualization, without any meaningful interpretation, so users must rely on their own expertise and experience to interpret the visual information. This paper therefore proposes a CNN-based method to automatically interpret images captured from CCTV cameras, using snow scene segmentation as a case example. The CNN models are trained on 3 classes: snow, non-snow and non-ground. The non-ground class is learned explicitly so that the models do not confuse snow pixels with non-ground pixels, e.g. sky regions. VGG-19 with pre-trained weights is retrained using manually labeled snow, non-snow and non-ground samples. The learned models achieve up to 85% sensitivity and 97% specificity in snow area segmentation.
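The sensitivity and specificity figures quoted above can be made concrete with a small sketch. It assumes (the abstract does not say so) that both metrics are computed per pixel, with snow as the positive class and both non-snow and non-ground counted as negative. The label encoding, the function name `snow_sensitivity_specificity`, and the toy label maps are illustrative only and are not taken from the paper.

```python
# Hypothetical label encoding; the paper does not specify one.
SNOW, NON_SNOW, NON_GROUND = 0, 1, 2


def snow_sensitivity_specificity(truth, pred):
    """Per-pixel sensitivity and specificity for the snow class.

    truth and pred are equally sized 2-D lists of class labels.
    Snow is the positive class; non-snow and non-ground are both negative.
    """
    tp = fn = tn = fp = 0
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            if t == SNOW:
                if p == SNOW:
                    tp += 1  # snow pixel correctly labeled as snow
                else:
                    fn += 1  # snow pixel missed
            else:
                if p == SNOW:
                    fp += 1  # non-snow or non-ground pixel labeled as snow
                else:
                    tn += 1  # negative pixel correctly kept out of snow
    # Guard against label maps with no positive or no negative pixels.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy 3x4 label maps: the top row is sky (non-ground), the rest is ground.
truth = [
    [NON_GROUND, NON_GROUND, NON_GROUND, NON_GROUND],
    [SNOW, SNOW, NON_SNOW, NON_SNOW],
    [SNOW, SNOW, NON_SNOW, NON_SNOW],
]
pred = [
    [NON_GROUND, NON_GROUND, SNOW, NON_GROUND],  # one sky pixel mistaken for snow
    [SNOW, SNOW, NON_SNOW, NON_SNOW],
    [SNOW, NON_SNOW, NON_SNOW, NON_SNOW],        # one snow pixel missed
]

sens, spec = snow_sensitivity_specificity(truth, pred)
print(f"sensitivity={sens:.3f} specificity={spec:.3f}")
```

In this toy case the sky pixel mislabeled as snow lowers specificity, which is the kind of confusion the explicit non-ground class is meant to reduce.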

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
