NU-LiteNet: Mobile Landmark Recognition using Convolutional Neural Networks


Abstract

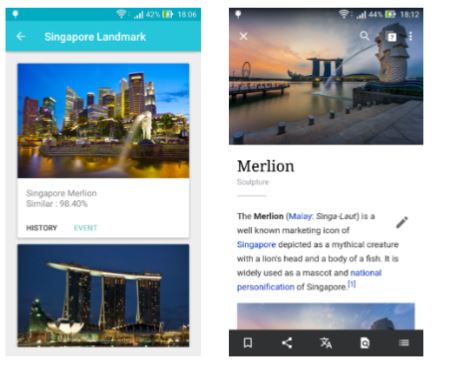

The growth of high-performance mobile devices has led to more research into on-device image recognition. The main research problems have been the latency and accuracy of automatic recognition, which remain obstacles to its real-world use. Although recently developed deep neural networks can achieve accuracy comparable to that of a human, some of them are still too slow. This paper describes the development of a new convolutional neural network architecture, NU-LiteNet, which was derived from SqueezeNet to reduce the model size to a degree suitable for smartphones. As a result, the model size of NU-LiteNet is 2.6 times smaller than that of SqueezeNet. NU-LiteNet outperformed other Convolutional Neural Network (CNN) models for mobile devices (e.g., SqueezeNet and MobileNet), with accuracies of 81.15% and 69.58% on the Singapore and Paris landmark datasets, respectively. NU-LiteNet also recorded the shortest execution time on mobile phones, 0.7 seconds per image.
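To make the "times smaller" model-size comparison concrete, the following is a minimal sketch of how convolution parameter counts translate into storage size. It assumes every parameter is stored as a 32-bit float (4 bytes), which ignores file-format overhead and compression. The two layer lists are hypothetical examples chosen for illustration; they are not the published SqueezeNet or NU-LiteNet configurations, and the helper names (`conv_params`, `model_size_bytes`, `times_smaller`) are introduced here, not taken from the paper.

```python
"""Hypothetical sketch: estimating CNN model size from convolution layer shapes.

The layer lists in the demo are illustrative assumptions, not the
published SqueezeNet or NU-LiteNet architectures.
"""
from typing import List, Tuple

# (kernel_size, in_channels, out_channels) for one square convolution layer
ConvSpec = Tuple[int, int, int]

# Assumption: parameters are stored as float32 with no compression.
BYTES_PER_FLOAT32 = 4


def conv_params(kernel: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Count learnable parameters of a k x k convolution layer."""
    weights = kernel * kernel * c_in * c_out
    return weights + (c_out if bias else 0)


def model_size_bytes(layers: List[ConvSpec]) -> int:
    """Approximate model size in bytes as total parameters times 4 bytes."""
    total = sum(conv_params(k, c_in, c_out) for k, c_in, c_out in layers)
    return total * BYTES_PER_FLOAT32


def times_smaller(baseline_bytes: int, candidate_bytes: int) -> float:
    """Return how many times smaller the candidate model is than the baseline."""
    return baseline_bytes / candidate_bytes


if __name__ == "__main__":
    # Hypothetical baseline: two stacked 3x3 convolutions.
    baseline = [(3, 64, 128), (3, 128, 128)]
    # Hypothetical lighter variant: 1x1 layers shrink channels before each 3x3.
    lighter = [(1, 64, 32), (3, 32, 128), (1, 128, 32), (3, 32, 128)]

    base_size = model_size_bytes(baseline)
    light_size = model_size_bytes(lighter)
    print(f"baseline: {base_size} bytes")
    print(f"lighter:  {light_size} bytes")
    print(f"lighter is {times_smaller(base_size, light_size):.2f}x smaller")
```

The design choice illustrated here is that a 3x3 kernel has nine times as many weights per input/output channel pair as a 1x1 kernel, so reducing the channels fed into 3x3 layers is one common way to shrink a network; whether NU-LiteNet uses exactly this technique is not stated in the abstract.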

